How-To Geek

How to Tell If an Article Was Written by ChatGPT


You can tell a ChatGPT-written article by its simple, repetitive structure and its tendency to make logical and factual errors. Some tools are available for automatically detecting AI-generated text, but they are prone to false positives.

AI technology is changing what we see online and how we interact with the world. From a Midjourney photo of the Pope in a puffer coat to large language models like ChatGPT, artificial intelligence is working its way into our lives.

The more sinister uses of AI tech, like a political disinformation campaign blasting out fake articles, mean we need to educate ourselves enough to spot the fakes. So how can you tell if an article is actually AI-generated?

Multiple methods and tools currently exist to help determine whether the article you're reading was written by a robot. None of them is 100% reliable, and they can deliver false positives, but they do offer a starting point.

One big marker of human-written text, at least for now, is randomness. People write in different styles, use slang, and often make typos, while AI language models very rarely make those kinds of mistakes. According to MIT Technology Review, "human-written text is riddled with typos and is incredibly variable," while AI text generators like ChatGPT are much better at producing typo-free text. Of course, a good copy editor has the same effect, so you have to watch for more than just correct spelling.

Another indicator is punctuation patterns: humans use punctuation more randomly than an AI model does. AI-generated text also tends to contain more short, common connector words like "the," "it," or "is" and fewer long, rarely used words, because large language models work by predicting which word is most likely to come next rather than choosing words for how they sound, the way a human might.

This is visible in ChatGPT's response to one of the stock questions on OpenAI's website. When asked, "Can you explain quantum computing in simple terms," you get sentences like: "What makes qubits special is that they can exist in multiple states at the same time, thanks to a property called superposition. It's like a qubit can be both a 0 and a 1 simultaneously."

Short, simple connecting words are regularly used, the sentences are all a similar length, and paragraphs all follow a similar structure. The end result is writing that sounds and feels a bit robotic.
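The cues above (uniform sentence lengths, heavy use of short connector words) can be roughly quantified. The Python sketch below is an illustration under stated assumptions, not how any particular detector works: the connector-word list and the naive sentence splitter are hypothetical choices, and no single number here proves anything about authorship. It takes the quantum-computing excerpt quoted above as input.

```python
import re
import statistics

# Illustrative list of common English connector/function words. This is an
# assumption for the sketch, not a list taken from any real detector.
FUNCTION_WORDS = {"a", "an", "the", "it", "is", "are", "be", "of", "to", "and",
                  "that", "in", "at", "as", "can", "like", "this", "they"}

WORD = re.compile(r"[A-Za-z']+")

def style_features(text: str) -> dict:
    """Compute rough surface statistics related to the cues described above."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = [w.lower() for w in WORD.findall(text)]
    if not sentences or not words:
        return {"sentences": 0, "mean_sentence_length": 0.0,
                "length_cv": 0.0, "function_word_share": 0.0}
    lengths = [len(WORD.findall(s)) for s in sentences]
    mean_len = statistics.mean(lengths)
    # Coefficient of variation: values near 0 mean very uniform sentence lengths.
    length_cv = statistics.pstdev(lengths) / mean_len if mean_len else 0.0
    share = sum(w in FUNCTION_WORDS for w in words) / len(words)
    return {"sentences": len(sentences),
            "mean_sentence_length": mean_len,
            "length_cv": length_cv,
            "function_word_share": share}

sample = ("What makes qubits special is that they can exist in multiple states "
          "at the same time, thanks to a property called superposition. It's like "
          "a qubit can be both a 0 and a 1 simultaneously.")
print(style_features(sample))
```

Very uniform sentence lengths show up as a `length_cv` close to 0. As the article notes, a careful human writer or copy editor can produce the same pattern, so these numbers are hints at best.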

Large language models themselves can be trained to spot AI-generated writing. Training a system on two sets of text (one written by AI and the other written by people) can, in theory, teach the model to recognize AI writing like ChatGPT's.
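To make the two-corpus idea concrete, here is a deliberately tiny sketch. It swaps in a multinomial naive Bayes classifier rather than a large language model, and the four training sentences are invented; real detectors are trained on far larger datasets with much stronger models, so treat this only as an illustration of "learn from labeled human and AI examples, then classify new text."

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayesDetector:
    """Toy multinomial naive Bayes classifier with add-one (Laplace) smoothing."""

    LABELS = ("human", "ai")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()
        self.vocab = set()

    def fit(self, texts, labels):
        # Count words per label; every label needs at least one training text.
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def log_score(self, text: str, label: str) -> float:
        # log P(label) + sum of log P(word | label), smoothed so that unseen
        # words do not drive the score to zero.
        total_words = sum(self.word_counts[label].values())
        prior = self.doc_counts[label] / sum(self.doc_counts.values())
        score = math.log(prior)
        for tok in tokenize(text):
            p = (self.word_counts[label][tok] + 1) / (total_words + len(self.vocab))
            score += math.log(p)
        return score

    def predict(self, text: str) -> str:
        return max(self.LABELS, key=lambda label: self.log_score(text, label))

# Invented training data, for illustration only.
detector = NaiveBayesDetector().fit(
    ["honestly i was gonna skip it lol",
     "gonna grab coffee, brb lol",
     "furthermore it is important to note the benefits",
     "additionally it is important to consider the context"],
    ["human", "human", "ai", "ai"],
)
print(detector.predict("furthermore additionally it is important"))
print(detector.predict("lol gonna skip it"))
```

With only four examples the model simply memorizes word choices; the point is the workflow, not the accuracy.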

Researchers are also working on watermarking methods to detect AI articles and text. Tom Goldstein, who teaches computer science at the University of Maryland, is working on a way to build watermarks into AI language models in the hope that it can help detect machine-generated writing even if it's good enough to mimic human randomness.

The watermark would be invisible to readers but detectable by an algorithm, which would label text as human- or AI-generated depending on how often it followed or broke the watermarking rules. Unfortunately, this method hasn't performed as well in tests on later ChatGPT models.
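The article doesn't spell out Goldstein's watermarking rules. The sketch below assumes a simplified "green list" scheme: the previous word pseudo-randomly marks a fraction (`GAMMA`) of possible next words as green, a watermarking generator favors green words, and a detector counts the green words and converts that count into a z-score. Working on whole words instead of model tokens, SHA-256 seeding, and the `toy_generate` helper are all hypothetical choices made for illustration.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of the vocabulary placed on the "green list"

def is_green(prev_word: str, word: str, gamma: float = GAMMA) -> bool:
    """Pseudo-randomly decide whether `word` is green, seeded by the previous word."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to [0, 1) and compare with gamma.
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def watermark_z_score(words: list, gamma: float = GAMMA) -> float:
    """How far the green-word count sits above the gamma * T expected by chance."""
    t = len(words) - 1  # number of (previous word, word) pairs scored
    if t <= 0:
        return 0.0
    green = sum(is_green(p, w, gamma) for p, w in zip(words, words[1:]))
    return (green - gamma * t) / math.sqrt(t * gamma * (1 - gamma))

def toy_generate(vocab: list, length: int, start: str = "<s>") -> list:
    """Hypothetical 'generator' that always picks a green word when one exists."""
    words = [start]
    for _ in range(length):
        greens = [w for w in vocab if is_green(words[-1], w)]
        words.append(greens[0] if greens else vocab[0])
    return words

vocab = [f"w{i}" for i in range(50)]
print(watermark_z_score(toy_generate(vocab, 40)))  # far above 0 for watermarked text
```

Ordinary text should land near a z-score of 0, because only about `GAMMA` of its words are green by chance. Heavy rewording breaks the word pairs the detector relies on, which is one reason watermarks can be fragile.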

You can find multiple copy-and-paste tools online to help you check whether an article is AI-generated. Many of them use language models to scan the text, including GPT-4 itself.

Undetectable AI, for example, markets itself as a tool to make your AI writing indistinguishable from a human's. Paste the text into its window and the program checks it against results from other AI detection tools like GPTZero to assign it a likelihood score; in effect, it checks whether eight other AI detectors would think your text was written by a robot.

Originality.ai is another tool, geared toward large publishers and content producers. It claims to be more accurate than its competitors and uses GPT-4 to help detect text written by AI. Other popular checking tools include:

Most of these tools give you a percentage, like 96% human and 4% AI, indicating how likely it is that the text was written by a human. If the score is 40 to 50% AI or higher, the piece was likely AI-generated.

While developers are working to make these tools better at detecting AI-generated text, none of them is fully accurate, and any of them can falsely flag human writing as AI-generated. There's also concern that because large language models like GPT-4 are improving so quickly, detection models are constantly playing catch-up.


In addition to using tools, you can train yourself to catch AI-generated content. It takes practice, but over time you can get better at it.

Daphne Ippolito, a senior research scientist at Google's AI division Google Brain, made a game called Real Or Fake Text (ROFT) that can help you separate human sentences from robotic ones by gradually training you to notice when a sentence doesn't quite look right.

One common marker of AI text, according to Ippolito, is nonsensical statements like "it takes two hours to make a cup of coffee." Ippolito's game largely focuses on helping people detect those kinds of errors. In fact, there have been multiple instances of AI writing programs stating inaccurate facts with total confidence. You probably shouldn't ask one to do your math assignment, either, as they don't seem to handle numerical calculations very well.

Right now, these are the best methods we have for catching text written by an AI program. However, language models are improving so fast that current detection methods quickly become outdated, leaving us in what Melissa Heikkilä of MIT Technology Review calls an arms race.



Check if Something Was Written by ChatGPT: AI Detection Guide

Last Updated: June 25, 2024 Fact Checked


This article was reviewed by Stan Kats and by wikiHow staff writer Nicole Levine, MFA. Stan Kats is a Professional Technologist and the COO and Chief Technologist for The STG IT Consulting Group in West Hollywood, California. Stan provides comprehensive technology solutions to businesses through managed IT services, and to individuals through his consumer service business, Stan's Tech Garage. Stan holds a BA in International Relations from The University of Southern California. He began his career working in the Fortune 500 IT world. Stan founded his companies to offer enterprise-level expertise to small businesses and individuals. There are 12 references cited in this article, which can be found at the bottom of the page. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 60,530 times.

With the rising popularity of ChatGPT, Bard, and other AI chatbots, it can be hard to tell whether a piece of writing was created by a human or AI. There are many AI detection tools available, but the truth is, many of these tools can produce both false-positive and false-negative results in essays, articles, cover letters, and other content. Fortunately, there are still reliable ways to tell whether a piece of writing was generated by ChatGPT or written by a human. This wikiHow article will cover the best AI detection tools for teachers, students, and other curious users, and provide helpful tricks for spotting AI-written content by sight.

Things You Should Know

- Tools like OpenAI's Text Classifier (since discontinued by OpenAI), GPTZero, and Copyleaks can check writing for ChatGPT, LLaMA, and other AI language model use.

- AI-generated content that has been passed through paraphrasing tools may not be reliably detected by standard AI content detectors. [1]

- ChatGPT often produces writing that looks "perfect" on the surface but contains false information.

- Some signs that ChatGPT did the writing: A lack of descriptive language, words like "firstly" and "secondly," and sentences that look right but don't make sense.

- AI writing may lack a consistent tone, style, or perspective throughout a piece.

- AI-generated text usually lacks authentic personal experiences or specific real-world examples.

- AI may struggle with nuanced cultural or contextual references that a human writer would naturally include.

How AI Detection Tools Work

- An AI detection tool compares a piece of writing to similar content, estimates how predictable the text is, and labels the text as either human- or AI-generated.

- These tools also look for other indicators, or "signatures," that are associated with AI-generated text, such as word choice and patterns. [2]

- If an AI detection tool reports that a piece of writing was mostly AI-generated, don't rely on that report alone. It's best to use AI detection tools only if you've already found other signs that the writing was produced by ChatGPT. [4]

- Running a piece of writing through multiple AI detection tools can help you get a sense of how different tools work. It can also help you spot false negatives and false positives.

- If you're evaluating a piece of writing for potential AI use, try searching the web for a few facts from the text. Focus on facts that are easy to verify, such as dates and specific events.
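A small helper can show what comparing several detectors looks like in practice. This is a hypothetical sketch: the detector names, the 0 to 1 score scale, and the 0.5 cutoff are assumptions, since each real tool reports results in its own format.

```python
from statistics import mean

def summarize_detectors(scores: dict, flag_at: float = 0.5) -> dict:
    """Summarize AI-likelihood scores (0.0 to 1.0) from several detectors.

    `flag_at` is an arbitrary cutoff chosen for this sketch; real tools
    report scores on their own scales and calibrate them differently.
    """
    if not scores:
        raise ValueError("need at least one detector score")
    values = list(scores.values())
    flagged = [name for name, s in scores.items() if s >= flag_at]
    return {
        "mean_ai_score": mean(values),
        # A wide spread means the tools disagree: a hint to dig deeper
        # (for example, by fact-checking) before drawing any conclusion.
        "spread": max(values) - min(values),
        "flagged_by": flagged,
        "agreement": len(flagged) / len(values),
    }

# Hypothetical scores for one essay from three unnamed detectors.
report = summarize_detectors({"detector_a": 0.92, "detector_b": 0.35, "detector_c": 0.81})
print(report)
```

Here two of three tools flag the essay but one strongly disagrees, which is exactly the situation where a detector result alone should not settle the question.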

Tyrone Showers

Spotting AI-written text on your own can be a real challenge. Look for grammatically correct but robotic language lacking humor and personal touches. A writer's sudden shift towards perfect language can also indicate AI involvement. Remember, human writing can share these traits, so considering the context is crucial.

- For example, if you're evaluating a cover letter for AI use, you might tell ChatGPT, "Write me a cover letter for a junior developer position at Company X. Explain that I graduated from Rutgers with a Computer Science degree, love JavaScript and Ruby, and have been working as a barista for the past year."

- Because ChatGPT is conversational, you can continue providing more context. For example, "add something to the cover letter about not jumping right into the industry after college because of the pandemic."

Community Q&A

- Cornell researchers found that people mistakenly judged AI-generated news articles credible more than 60% of the time. [13]

- If a ChatGPT detection tool identifies writing as AI-written, consider that it may be a false positive before you approach the writer.

- If you suspect ChatGPT wrote something but can't tell for sure, have a conversation with the writer. Don't accuse them of using ChatGPT; instead, ask them more questions about the writing or content to make sure their knowledge lines up with what they wrote. You may also want to ask about their writing process to see whether they mention using ChatGPT or other AI writing tools.


- https://proceedings.neurips.cc/paper_files/paper/2023/hash/575c450013d0e99e4b0ecf82bd1afaa4-Abstract-Conference.html

- https://www.turnitin.com/blog/ai-writing-the-challenge-and-opportunity-in-front-of-education-now

- https://www.turnitin.com/blog/understanding-false-positives-within-our-ai-writing-detection-capabilities

- https://help.openai.com/en/collections/5929286-educator-faq

- https://www.npr.org/2023/01/09/1147549845/gptzero-ai-chatgpt-edward-tian-plagiarism

- https://app.gptzero.me/app/subscription-plans

- https://contentatscale.ai/ai-content-detector/

- https://copyleaks.com/api-pricing

- https://research.google/pubs/pub51844/

- https://help.openai.com/en/articles/6783457-what-is-chatgpt

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9939079/

- https://www.technologyreview.com/2022/12/19/1065596/how-to-spot-ai-generated-text/


A college student created an app that can tell whether AI wrote an essay

Emma Bowman

GPTZero in action: The bot correctly detected AI-written text. The writing sample that was submitted? ChatGPT's attempt at "an essay on the ethics of AI plagiarism that could pass a ChatGPT detector tool." (GPTZero.me/Screenshot by NPR)

Teachers worried about students turning in essays written by a popular artificial intelligence chatbot now have a new tool of their own.

Edward Tian, a 22-year-old senior at Princeton University, has built an app to detect whether text is written by ChatGPT, the viral chatbot that's sparked fears over its potential for unethical uses in academia.

Edward Tian, a 22-year-old computer science student at Princeton, created an app that detects essays written by the impressive AI-powered language model known as ChatGPT. (Image: Edward Tian)

Tian, a computer science major who is minoring in journalism, spent part of his winter break creating GPTZero, which he said can "quickly and efficiently" decipher whether a human or ChatGPT authored an essay.

His motivation to create the bot was to fight what he sees as an increase in AI plagiarism. Since the release of ChatGPT in late November, there have been reports of students using the breakthrough language model to pass off AI-written assignments as their own.

"there's so much chatgpt hype going around. is this and that written by AI? we as humans deserve to know!" Tian wrote in a tweet introducing GPTZero.

Tian said many teachers have reached out to him after he released his bot online on Jan. 2, telling him about the positive results they've seen from testing it.

More than 30,000 people had tried out GPTZero within a week of its launch. It was so popular that the app crashed. Streamlit, the free platform that hosts GPTZero, has since stepped in to support Tian with more memory and resources to handle the web traffic.

How GPTZero works

To determine whether an excerpt is written by a bot, GPTZero uses two indicators: "perplexity" and "burstiness." Perplexity measures the complexity of text; if GPTZero is perplexed by the text, then it has a high complexity and it's more likely to be human-written. However, if the text is more familiar to the bot — because it's been trained on such data — then it will have low complexity and therefore is more likely to be AI-generated.
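Perplexity has a standard definition: the exponential of the average negative log probability a language model assigns to each token. The sketch below assumes you already have those per-token log probabilities from some model; the probabilities shown are made up, and GPTZero's actual model and thresholds are not described in the article.

```python
import math

def perplexity(token_logprobs: list) -> float:
    """Perplexity = exp(-average natural-log probability per token).

    Low values mean the model found the text predictable; high values mean
    the text surprised it.
    """
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

# Made-up per-token probabilities that some language model might assign.
predictable = [math.log(p) for p in (0.9, 0.8, 0.85, 0.9)]
surprising = [math.log(p) for p in (0.2, 0.05, 0.4, 0.1)]
print(perplexity(predictable), perplexity(surprising))
```

A useful sanity check: if every token gets probability 0.5, the perplexity is exactly 2, as if the model were choosing between two equally likely words at each step.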

Separately, burstiness compares the variations of sentences. Humans tend to write with greater burstiness, for example, with some longer or complex sentences alongside shorter ones. AI sentences tend to be more uniform.
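The article doesn't give GPTZero's burstiness formula. One plausible proxy, assumed here, is how much perplexity varies from sentence to sentence; the per-sentence log probabilities below are invented for illustration.

```python
import math
import statistics

def perplexity(token_logprobs: list) -> float:
    """exp(-average natural-log probability per token)."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

def burstiness(sentence_logprobs: list) -> float:
    """Population standard deviation of per-sentence perplexities.

    An assumed proxy for "burstiness": 0 means every sentence is equally
    predictable; larger values mean predictability jumps around.
    """
    return statistics.pstdev([perplexity(lp) for lp in sentence_logprobs])

# Hypothetical per-token log probabilities, grouped by sentence.
steady = [[math.log(0.5)] * 6] * 3                       # three equally predictable sentences
bursty = [[math.log(0.5)] * 6, [math.log(0.05)] * 6]     # one plain, one surprising sentence
print(burstiness(steady), burstiness(bursty))
```

In this toy case the steady text scores 0 while the bursty text, whose sentence perplexities are about 2 and 20, scores about 9.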

In a demonstration video, Tian compared the app's analysis of a story in The New Yorker and a LinkedIn post written by ChatGPT. It successfully distinguished writing by a human versus AI.


Tian acknowledged that his bot isn't foolproof, as some users have reported when putting it to the test. He said he's still working to improve the model's accuracy.

But by designing an app that sheds some light on what separates human from AI, the tool helps work toward a core mission for Tian: bringing transparency to AI.

"For so long, AI has been a black box where we really don't know what's going on inside," he said. "And with GPTZero, I wanted to start pushing back and fighting against that."

The quest to curb AI plagiarism


The college senior isn't alone in the race to rein in AI plagiarism and forgery. OpenAI, the developer of ChatGPT, has signaled a commitment to preventing AI plagiarism and other nefarious applications. Last month, Scott Aaronson, a researcher currently focusing on AI safety at OpenAI, revealed that the company has been working on a way to "watermark" GPT-generated text with an "unnoticeable secret signal" to identify its source.

The open-source AI community Hugging Face has put out a tool to detect whether text was created by GPT-2, an earlier version of the AI model used to make ChatGPT. A philosophy professor in South Carolina who happened to know about the tool said he used it to catch a student submitting AI-written work.

The New York City education department said on Thursday that it's blocking access to ChatGPT on school networks and devices over concerns about its "negative impacts on student learning, and concerns regarding the safety and accuracy of content."

Tian is not opposed to the use of AI tools like ChatGPT.

GPTZero is "not meant to be a tool to stop these technologies from being used," he said. "But with any new technologies, we need to be able to adopt it responsibly and we need to have safeguards."

Human Writer or AI? Scholars Build a Detection Tool

DetectGPT can determine with up to 95% accuracy whether a large language model wrote that essay or social media post.

The launch of OpenAI’s ChatGPT, with its remarkably coherent responses to questions or prompts, catapulted large language models (LLMs) and their capabilities into the public consciousness. Headlines captured both excitement and cause for concern: Can it write a cover letter? Allow people to communicate in a new language? Help students cheat on a test? Influence voters across social media? Put writers out of a job?

Now with similar models coming out of Google, Meta, and more, researchers are calling for more oversight.

“We need a new level of infrastructure and tools to provide guardrails around these models,” says Eric Anthony Mitchell , a fourth-year computer science graduate student at Stanford University whose PhD research is focused on developing such an infrastructure.

One key guardrail would provide teachers, journalists, and citizens a way to know when they are reading text generated by an LLM rather than a human. To that end, Mitchell and his colleagues have developed DetectGPT, released as a demo and a paper last week, which distinguishes between human- and LLM-generated text. In initial experiments, the tool accurately identifies authorship 95% of the time across five popular open-source LLMs.

While the tool is in its early stages, Mitchell hopes to improve it to the point that it can benefit society.

“The research and deployment of these language models is moving quickly,” says Chelsea Finn , assistant professor of computer science and of electrical engineering at Stanford University and one of Mitchell’s advisors. “The general public needs more tools for knowing when we are reading model-generated text.”

An Intuition

Barely two months ago, fellow graduate student and co-author Alexander Khazatsky texted Mitchell to ask: Do you think there’s a way to classify whether an essay was written by ChatGPT? It set Mitchell thinking.

Researchers had already tried several general approaches to mixed effect. One – an approach used by OpenAI itself – involves training a model with both human- and LLM-generated text and then asking it to classify whether another text was written by a human or an LLM. But, Mitchell thought, to be successful across multiple subject areas and languages, this approach would require a huge amount of data for training.

Read the full study, DetectGPT: Zero-Shot Machine-Generated Text Detection using Probability Curvature

A second existing approach avoids training a new model and simply uses the LLM that likely generated the text to detect its own outputs. In essence, this approach asks an LLM how much it “likes” a text sample, Mitchell says. And by “like,” he doesn’t mean this is a sentient model that has preferences. Rather, a model’s “liking” of a piece of text is a shorthand way to say “scores highly,” and it involves a single number: the probability of that specific sequence of words appearing together, according to the model. “If it likes it a lot, it’s probably from the model. If it doesn’t, it’s not from the model.” And this approach works reasonably well, Mitchell says. “It does much better than random guessing.”

But as Mitchell pondered Khazatsky’s question, he had the initial intuition that because even powerful LLMs have subtle, arbitrary biases for using one phrasing of an idea over another, the LLM will tend to “like” any slight rephrasing of its own outputs less than the original. By contrast, even when an LLM “likes” a piece of human-generated text, meaning it gives it a high probability rating, the model’s evaluation of slightly modified versions of that text would be much more varied. “If we perturb a human-generated text, it’s roughly equally likely that the model will like it more or less than the original.”

Mitchell also realized that his intuition could be tested using popular open-source models including those available through OpenAI’s API. “Calculating how much a model likes a particular piece of text is basically how these models are trained,” Mitchell says. “They give us this number automatically, which turns out to be really useful.”

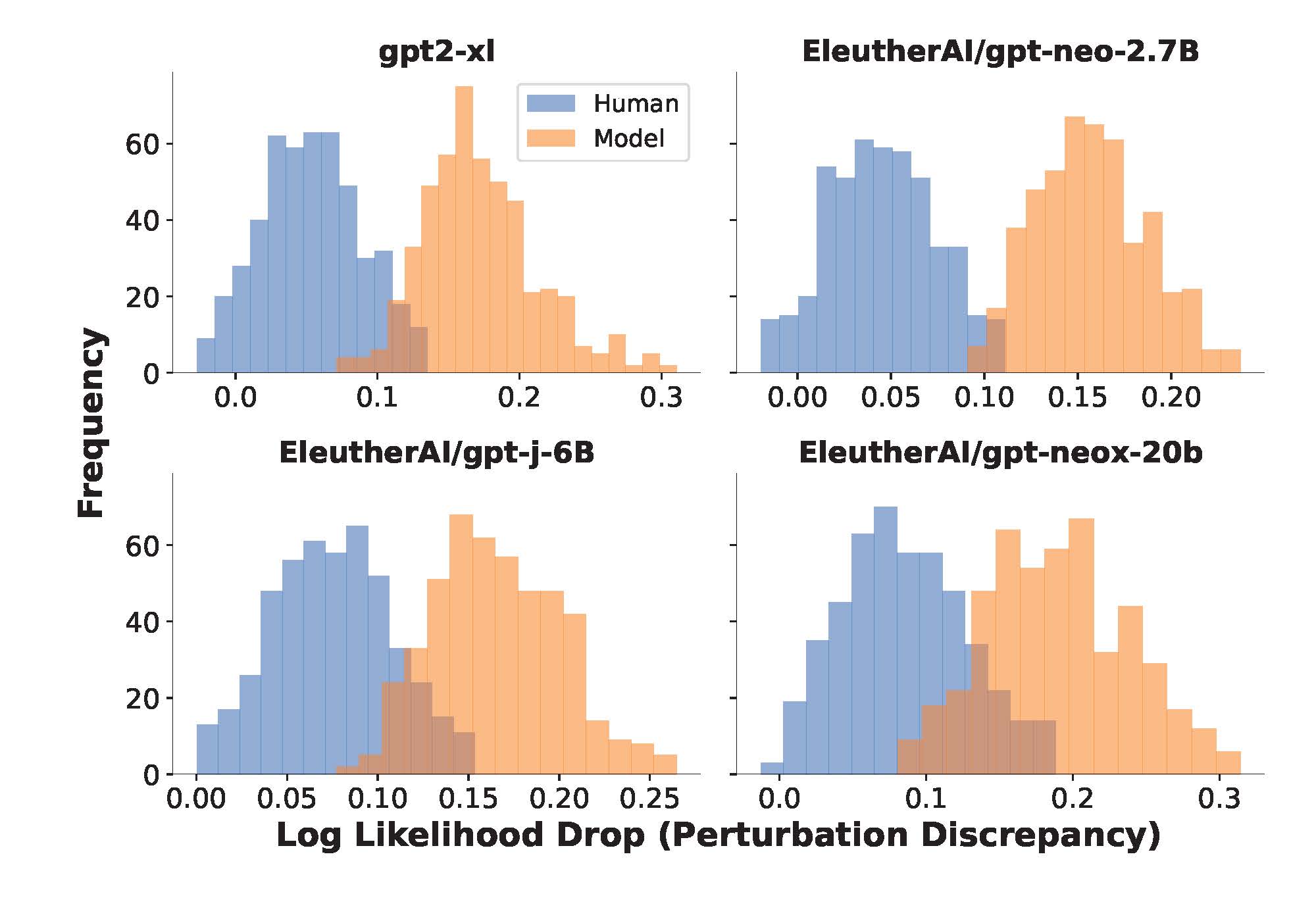

Testing the Intuition

To test Mitchell’s idea, he and his colleagues ran experiments in which they evaluated how much various publicly available LLMs liked human-generated text as well as their own LLM-generated text, including fake news articles, creative writing, and academic essays. They also evaluated how much the LLMs, on average, liked 100 perturbations of each LLM- and human-generated text. When the team plotted the difference between these two numbers for LLM- compared to human-generated texts, they saw two bell curves that barely overlapped. “We can discriminate between the source of the texts pretty well using that single number,” Mitchell says. “We’re getting a much more robust result compared with methods that simply measure how much the model likes the original text.”

Each plot shows the distribution of the perturbation discrepancy for human-written news articles and machine-generated articles. The average drop in log probability (perturbation discrepancy) after rephrasing a passage is consistently higher for model-generated passages than for human-written passages.
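The quantity in the caption, the perturbation discrepancy, is the log probability of the original passage minus the average log probability of its rewrites. Below is a minimal sketch that assumes the log probabilities have already been computed (in the paper, a separate mask-filling model, T5, produces the rewrites and the source model scores them); the numbers and the `THRESHOLD` value are hypothetical.

```python
import statistics

def perturbation_discrepancy(original_logprob: float, perturbed_logprobs: list) -> float:
    """log p(x) minus the average log p of slightly rewritten versions of x."""
    return original_logprob - statistics.mean(perturbed_logprobs)

# Hypothetical log probabilities from a scoring model. For model-written text,
# rewrites tend to score clearly lower than the original...
model_text = perturbation_discrepancy(-120.0, [-135.0, -132.0, -138.0])
# ...while for human-written text they land both above and below it.
human_text = perturbation_discrepancy(-150.0, [-149.0, -153.0, -151.0])

THRESHOLD = 5.0  # assumed cutoff; in practice it would be tuned on labeled data
for name, d in (("model_text", model_text), ("human_text", human_text)):
    print(name, d, "likely machine-generated" if d > THRESHOLD else "likely human")
```

The design point is that neither original score alone decides the label; what matters is how much the score drops when the passage is slightly reworded.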

In the team’s initial experiments, DetectGPT successfully classified human- vs. LLM-generated text 95% of the time when using GPT-NeoX, a powerful open-source model in the style of OpenAI’s GPT models. DetectGPT was also capable of detecting human- vs. LLM-generated text using LLMs other than the original source model, but with slightly less confidence. (As of this time, ChatGPT is not publicly available to test directly.)

More Interest in Detection

Other organizations are also looking at ways to identify AI-written text. In fact, OpenAI released its new text classifier last week and reports that it correctly identifies AI-written text 26% of the time and incorrectly classifies human-written text as AI-written 9% of the time.
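Those two rates don't say how often a flagged text is actually AI-written; that also depends on how much AI-written text is in the pool being checked. A quick Bayes' rule calculation, with base rates that are assumptions rather than figures from the article, shows why:

```python
def share_of_flags_that_are_ai(tpr: float, fpr: float, base_rate: float) -> float:
    """Bayes' rule: P(text is AI-written | classifier flags it)."""
    flagged_ai = tpr * base_rate            # AI-written texts that get flagged
    flagged_human = fpr * (1 - base_rate)   # human-written texts wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)

# Rates reported for OpenAI's classifier (26% caught, 9% false alarms);
# the base rates below are assumptions chosen for illustration.
for base_rate in (0.10, 0.50):
    print(base_rate, round(share_of_flags_that_are_ai(0.26, 0.09, base_rate), 3))
```

If 10% of submissions were AI-written, only about 24% of flagged texts would actually be AI-written; at 50%, about 74% would be.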

Mitchell is reluctant to directly compare the OpenAI results with those of DetectGPT because there is no standardized dataset for evaluation. But his team did run some experiments using OpenAI’s previous generation pre-trained AI detector and found that it worked well on English news articles, performed poorly on PubMed articles, and failed completely on German language news articles. These kinds of mixed results are common for models that depend on pre-training, he says. By contrast, DetectGPT worked out of the box for all three of these domains.

Evading Detection

Although the DetectGPT demo has been publicly available for only about a week, the feedback has already been helpful in identifying some vulnerabilities, Mitchell says. For example, a person can strategically design a ChatGPT prompt to evade detection, such as by asking the LLM to speak idiosyncratically or in ways that seem more human. The team has some ideas for how to mitigate this problem, but hasn’t tested them yet.

Another concern is that students using LLMs like ChatGPT to cheat on assignments will simply edit the AI-generated text to evade detection. Mitchell and his team explored this possibility in their work, finding that although there is a decline in the quality of detection for edited essays, the system still did a pretty good job of spotting machine-generated text when fewer than 10-15% of the words had been modified.

In the long run, Mitchell says, the goal is to provide the public with a reliable, actionable prediction as to whether a text – or even a portion of a text – was machine generated. “Even if a model doesn’t think an entire essay or news article was written by a machine, you’d want a tool that can highlight a paragraph or sentence that looks particularly machine-crafted,” he says.

To be clear, Mitchell believes there are plenty of legitimate use cases for LLMs in education, journalism, and elsewhere. However, he says, “giving teachers, newsreaders, and society in general the tools to verify the source of the information they’re consuming has always been useful, and remains so even in the AI era."

Building Guardrails for LLMs

DetectGPT is only one of several guardrails that Mitchell is building for LLMs. In the past year he also published several approaches for editing LLMs, as well as a strategy called “self-destructing models” that disables an LLM when someone tries to use it for nefarious purposes.

Before completing his PhD, Mitchell hopes to refine each of these strategies at least one more time. But right now, Mitchell is grateful for the intuition he had in December. “In science, it’s rare that your first idea works as well as DetectGPT seems to. I’m happy to admit that we got a bit lucky!”

Stanford HAI’s mission is to advance AI research, education, policy and practice to improve the human condition.

ChatGPT Detector

How Does the ChatGPT Detector Work?

Our free AI detection tool is designed to identify whether a piece of text was written by a human or generated by an AI language model. The tool works at several levels, analyzing sentences, paragraphs, and entire documents to determine whether writing should be flagged as AI content.

The core of its detection functionality lies in its specialized model, which has been meticulously trained on a vast and varied collection of texts, both human-written and AI-generated, focusing predominantly on English prose.

One of the key strengths of our AI checker is its versatility and robustness across a range of language models - it's not just for ChatGPT! It also detects writing from well-known models like Claude, Bard, Grok, GPT-4, GPT-3, GPT-2, LLaMA, and other services. Our detection tool technology is consistently updated and improved to stay ahead in detection accuracy.

The testing process of our detection algorithm is rigorous and thorough. We assess our models using a vast, never-before-seen set of human and AI-generated articles from our extensive dataset. This is supplemented by a smaller set of challenging articles that fall outside its training distribution.

In summary, our ChatGPT Detector tool is an advanced and constantly evolving AI content detector solution for distinguishing between human and AI-generated text.

Frequently Asked Questions

Q: How do I unlock more uses of this tool? A: If you're not logged in, you'll be able to use our AI detector once per day. Create a free account to unlock an additional run each day. For unlimited use, sign up for PromptFolder Pro.

Q: Is this tool 100% accurate? A: No. AI writing is a constantly evolving field, and no tool can determine with 100% accuracy whether text was written by a human or an AI. Use this tool as a starting point, not as an absolute determination of whether content was created by ChatGPT or another AI model.

Q: Does this tool work for other AI text generators like Claude, GPT-4, GPT-3, GPT-2, LLaMA, Bard, Gemini, or Grok? A: Yes, our ChatGPT detector is more of a general "AI detector." All of these AI generation tools use a similar basic premise - think autocomplete on steroids. Our free AI detector tool analyzes the text you provide to see how likely it is to be generated from any of these AI tools.

Q: Will this tool help detect plagiarism? A: Our algorithm is focused on detecting AI-generated content, not plagiarism. That said, AI models are themselves trained on human-written content and have a tendency to reproduce it, so AI-generated text can frequently contain plagiarism as well.


Everything You Need to Know About AI Detectors for ChatGPT

Detecting when text has been generated by tools like ChatGPT is a difficult task. Popular artificial-intelligence-detection tools, like GPTZero, may provide some guidance for users by telling them when something was written by a bot and not a human, but even specialized software is not foolproof and can spit out false positives.

As a journalist who started covering AI detection over a year ago, I wanted to curate some of WIRED’s best articles on the topic to help readers like you better understand this complicated issue.

Have even more questions about spotting outputs from ChatGPT and other chatbot tools? Sign up for my AI Unlocked newsletter, and reach out to me directly with anything AI-related that you would like answered or want WIRED to explore more.

How to Detect AI-Generated Text, According to Researchers

February 2023 by Reece Rogers

In this article, which was written about two months after the launch of ChatGPT, I started to grapple with the complexities of AI text detection as well as what the AI revolution might mean for writers who publish online. Edward Tian, the founder behind GPTZero, spoke with me about how his AI detector focuses on factors like text variance and randomness.
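GPTZero's exact method isn't described here, but one simple, hypothetical way to put a number on "text variance" is to measure how much sentence length swings within a passage. The sketch below computes the coefficient of variation (standard deviation divided by mean) of sentence lengths. The function names, the naive sentence splitting, and the assumption that a higher score hints at human writing are illustrative choices, not GPTZero's algorithm, and a single statistic like this is far too weak to judge text on its own.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split naively on '.', '!' or '?' and count the words in each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def length_variation(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    Higher values mean sentence lengths swing more, which this sketch treats
    as a weak, hypothetical hint of human writing.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure any variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = "The tool is useful. The tool is simple. The tool is fast."
varied = "Wow. I did not expect the tool to flag my entire essay as machine-written. Odd."
print(round(length_variation(uniform), 2), round(length_variation(varied), 2))
```

The uniform passage scores zero because every sentence has the same length, while the varied passage scores well above one.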

As you read, focus on the section about text watermarking: “A watermark might be able to designate certain word patterns to be off-limits for the AI text generator.” While watermarking is a promising idea, the researchers I spoke with were already skeptical about its potential efficacy.
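The "off-limits word patterns" idea can be sketched as a toy watermark in the style of the "green list" scheme described in published research. A hash of the previous word splits candidate words into allowed (green) and off-limits (red) sets, the generator only picks green words, and a detector counts how many green words appear. Everything here (the SHA-256 hashing rule, the 50% green fraction, the tiny vocabulary, and the function names) is a hypothetical simplification, not the method of any researcher or company mentioned in the article.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of the vocabulary that is "green" at each step


def is_green(prev_word: str, word: str) -> bool:
    """Pseudo-randomly assign `word` to the green list, seeded by the previous word."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode()).digest()
    return digest[0] / 256 < GAMMA


def watermark_z_score(words: list[str]) -> float:
    """How many standard deviations the green-word count sits above chance."""
    pairs = list(zip(words, words[1:]))
    t = len(pairs)
    if t == 0:
        return 0.0
    green = sum(is_green(prev, word) for prev, word in pairs)
    return (green - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


def toy_watermarked_text(vocab: list[str], length: int) -> list[str]:
    """Hypothetical generator that avoids red (off-limits) words whenever it can."""
    words = [vocab[0]]
    for _ in range(length):
        # Fall back to the full vocabulary in the unlikely case every word is red.
        choices = [w for w in vocab if is_green(words[-1], w)] or vocab
        words.append(choices[len(words) % len(choices)])
    return words


vocab = ("the a tool text model writer reader story data signal "
         "pattern word news essay page line note draft topic idea").split()
marked = toy_watermarked_text(vocab, 50)
human = "a watermark might be able to designate certain word patterns to be off-limits".split()
print(round(watermark_z_score(marked), 2), round(watermark_z_score(human), 2))
```

Unwatermarked text lands near a z-score of 0 on average, while the toy watermarked text scores far higher. Replacing or paraphrasing enough words pulls the score back toward zero, which hints at why researchers stayed cautious about watermarking.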

The AI Detection Arms Race Is On

September 2023 by Christopher Beam

A fantastic piece from last year’s October issue of WIRED, this article gives you an inside look into Edward Tian’s mindset as he worked to expand GPTZero’s reach and detection capabilities. The focus on how AI has impacted schoolwork is crucial.

AI text detection is top of mind for many classroom educators as they grade papers and, potentially, forgo essay assignments altogether due to students secretly using chatbots to complete homework assignments. While some students might use generative AI as a brainstorming tool, others are using it to fabricate entire assignments.

AI-Detection Startups Say Amazon Could Flag AI Books. It Doesn’t

September 2023 by Kate Knibbs

Do companies have a responsibility to flag products that might be generated by AI? Kate Knibbs investigated how potentially copyright-breaking AI-generated books were being listed for sale on Amazon, even though some startups believed the products could be spotted with special software and removed. One of the core debates about AI detection hinges on whether the potential for false positives (human-written text that’s accidentally flagged as the work of AI) outweighs the benefits of labeling algorithmically generated content.
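To see why false positives weigh so heavily in this debate, it helps to work through the arithmetic. The sketch below uses made-up rates (assumptions, not figures from the article or from any real detector) to show how, when most text is human-written, even a modest false-positive rate means a sizable share of flagged documents are human work.

```python
def share_of_flags_that_are_human(prevalence: float, tpr: float, fpr: float) -> float:
    """Fraction of flagged documents that were actually human-written.

    prevalence: share of all documents that are AI-generated
    tpr: share of AI-generated documents the detector flags (true-positive rate)
    fpr: share of human-written documents the detector flags (false-positive rate)
    """
    flagged_ai = prevalence * tpr
    flagged_human = (1 - prevalence) * fpr
    return flagged_human / (flagged_ai + flagged_human)


# Hypothetical numbers: 10% of submissions are AI-written, the detector catches
# 90% of them, and it wrongly flags 5% of human-written work.
share = share_of_flags_that_are_human(prevalence=0.10, tpr=0.90, fpr=0.05)
print(f"{share:.0%} of flagged documents were written by people")
```

With these assumed rates, one in three flagged documents (33%) is a false accusation, even though the detector is wrong about only 5% of human writing.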

Use of AI Is Seeping Into Academic Journals—and It’s Proving Difficult to Detect

August 2023 by Amanda Hoover

Going beyond just homework assignments, AI-generated text is appearing more in academic journals, where it is often forbidden without a proper disclosure. “AI-written papers could also draw attention away from good work by diluting the pool of scientific literature,” writes Amanda Hoover. One potential strategy for addressing this issue is for developers to build specialized detection tools that search for AI content within peer-reviewed papers.

Researchers Tested AI Watermarks—and Broke All of Them

October 2023 by Kate Knibbs

When I first spoke with researchers last February about watermarks for AI text detection, they were hopeful but cautious about the potential to imprint AI text with specific language patterns that are undetectable by human readers but obvious to detection software. Looking back, their trepidation seems well placed.

Just a half-year later, Kate Knibbs spoke with multiple sources who were smashing through AI watermarks and demonstrating their underlying weakness as a detection strategy. While not guaranteed to fail, watermarking AI text continues to be difficult to pull off.

Students Are Likely Writing Millions of Papers With AI

April 2024 by Amanda Hoover

One tool that teachers are trying to use to detect AI-generated classroom work is Turnitin, plagiarism detection software that added AI-spotting capabilities. (Turnitin is owned by Advance, the parent company of Condé Nast, which publishes WIRED.) Amanda Hoover writes, “Chechitelli says a majority of the service’s clients have opted to purchase the AI detection. But the risks of false positives and bias against English learners have led some universities to ditch the tools for now.”

AI detectors are more likely to falsely label writing by someone whose first language isn’t English as AI-generated than writing by a native speaker. As developers continue to work on improving AI-detection algorithms, the problem of erroneous results remains a core obstacle to overcome.


More and more students are using AI tools like ChatGPT in their writing process. Our AI Checker helps educators detect AI-generated, AI-refined, and human-written content in text. Analyze the content submitted by your students to ensure that their work is actually written by them. Promote a culture of honesty and originality among your students.

GPTZero is the leading AI detector for checking whether a document was written by a large language model such as ChatGPT. GPTZero detects AI at the sentence, paragraph, and document levels. Our model was trained on a large, diverse corpus of human-written and AI-generated text, with a focus on English prose.

Large language models themselves can be trained to spot AI-generated writing. Training the system on two sets of text, one written by AI and the other written by people, can theoretically teach the model to recognize and detect AI writing like ChatGPT's. Researchers are also working on watermarking methods to detect AI articles and text.
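The two-sets training idea can be illustrated without a large language model. The sketch below swaps in a much simpler technique, a naive Bayes word-count classifier, to show the same supervised setup: learn word statistics from labeled AI-written and human-written examples, then assign new text to whichever set it resembles more. The training sentences and function names are invented for illustration; a usable detector would need large, diverse corpora and a far stronger model.

```python
import math
from collections import Counter


def train(texts: list[str]) -> Counter:
    """Count word occurrences across every text in one labeled set."""
    counts: Counter = Counter()
    for text in texts:
        counts.update(text.lower().split())
    return counts


def log_likelihood(text: str, counts: Counter, vocab_size: int) -> float:
    """Log P(text | class) under a bag-of-words model with add-one smoothing."""
    total = sum(counts.values())
    return sum(
        math.log((counts[word] + 1) / (total + vocab_size))
        for word in text.lower().split()
    )


def classify(text: str, ai_counts: Counter, human_counts: Counter) -> str:
    """Pick the more likely class, assuming both are equally likely a priori."""
    vocab = set(ai_counts) | set(human_counts) | set(text.lower().split())
    ai_score = log_likelihood(text, ai_counts, len(vocab))
    human_score = log_likelihood(text, human_counts, len(vocab))
    return "ai" if ai_score > human_score else "human"


# Toy labeled sets (hypothetical examples, not real training data).
ai_model = train([
    "in conclusion it is important to note that the topic is important",
    "it is important to consider the many benefits of the topic",
])
human_model = train([
    "honestly i kinda hated that movie lol",
    "my cat knocked the coffee over again ugh",
])
print(classify("it is important to note the benefits", ai_model, human_model))
```

Because the classifier only learns which words each set tends to use, it inherits every bias in its training data, which is one reason such detectors can misfire on unfamiliar writing styles.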

There are many AI detection tools available, but the truth is, many of these tools can produce both false-positive and false-negative results in essays, articles, cover letters, and other content. Fortunately, there are still reliable ways to tell whether a piece of writing was generated by ChatGPT or written by a human.

ChatGPT is being used to write papers, news articles, and even text message responses. Here's how to tell if the text you're seeing was written by AI. ... How to Detect ChatGPT Text Yourself? You can detect ChatGPT-written text using online tools like OpenAI's AI Text Classifier. That tool came from OpenAI itself, the company that made ChatGPT. (OpenAI has since withdrawn it, citing its low rate of accuracy.)

We asked ChatGPT to "write an article about tools to detect if text is written by ChatGPT" and fed the results to the tools below. All of them determined—or suspected—that the text had ...

A college student made an app to detect AI-written text. Some students have been using ChatGPT, a text-based bot, to do their homework for them. Now, 22-year-old Edward Tian's new app is attracting ...

Barely two months ago, fellow graduate student and co-author Alexander Khazatsky texted Mitchell to ask: Do you think there's a way to classify whether an essay was written by ChatGPT? It set Mitchell thinking. Researchers had already tried several general approaches, with mixed results.
